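11. Building the Third Model

The previous installment showed how past race results can be put to use, so we now move on to developing the third model.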

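Motivation for the model¶

I want a model that takes both pedigree information and past race results into account.

Rather than raising the return rate indirectly by raising the hit rate, the aim is to raise the return rate directly.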

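Purpose of the model¶

Build a model that adds each runner's pedigree information and the win rates of the related offspring, together with the best time (mochiTime) and running style derived from past results.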

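Hypothesis to check¶

Check whether a prediction model can pick up rough characteristics from pedigree while also accounting for how running style and best time behave under each race condition.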

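Features¶

First-model features +

horse IDs of the sire, dam, damsire, and sire of the second dam +

offspring win rates for the sire, dam, damsire, and sire of the second dam +

inferred running style + past running styles + best time +

inferred last-3F time and inferred time to reach the last 3F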

Target variable¶

Finishing position + odds-implied win rate

11-1. Preparation¶

Part of the source code used here is paid content.

If you want to run the same analysis, please obtain it from the article below.

import pathlib
import warnings
import sys

sys.path.append(".")
sys.path.append("..")

from src.model_manager.lgbm_manager import LightGBMModelManager  # noqa
from src.core.meta.bet_name_meta import BetName  # noqa
from src.data_manager.preprocess_tools import DataPreProcessor  # noqa
from src.data_manager.data_loader import DataLoader  # noqa

warnings.filterwarnings("ignore")

root_dir = pathlib.Path(".").absolute().parent
dbpath = root_dir / "data" / "keibadata.db"

start_year = 2000  # oldest year held in the DB
split_year = 2014  # start year of the training period
target_year = 2019  # start year of the test period
end_year = 2023  # end year of the test period (the DB must contain data for this year)

# create the instances
data_loader = DataLoader(
    start_year,
    end_year,
    dbpath=dbpath  # set dbpath to match your environment; an absolute path is recommended
)

dataPreP = DataPreProcessor(
    # Caching was added in this installment. Set True to use it (default: True).
    use_cache=True,
    cache_dir=pathlib.Path("./data")
)

df = data_loader.load_racedata()
dfblood = data_loader.load_horseblood()

df = dataPreP.exec_pipeline(
    df,
    dfblood,
    blood_set=["s", "b", "bs", "bbs", "ss", "sss", "ssss", "bbbs"],
    lagN=5
)

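Starting with this installment, the following four preprocessing steps have been added:

- best times (mochiTime)
- running-style information
- pace information
- the previous five races of running-style and best-time data; the lagN argument sets how many previous races to fetch

See the following for details on the preprocessing.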

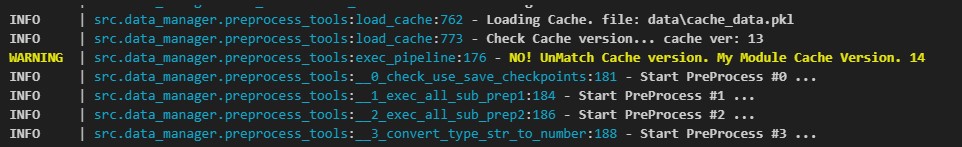

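Starting with this installment, the preprocessing has a cache.

Once preprocessing has run at least once, a "cache_data.pkl" file is created in the "data" directory under the directory of the notebook that ran it.

When preprocessing is run again, it loads the cache file and finishes immediately.

If you do not want to use the cache, set the "use_cache" argument to False when creating the instance.

Usage example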

dataPreP = DataPreProcessor(
    use_cache=True,
    cache_dir=pathlib.Path("./data")
)

df = data_loader.load_racedata()
dfblood = data_loader.load_horseblood()

df = dataPreP.exec_pipeline(
    df,
    dfblood,
    blood_set=["s", "b", "bs", "bbs", "ss", "sss", "ssss", "bbbs"],
    lagN=5
)

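There is also a simple cache check: if preprocessing steps have been added or removed, the preprocessing is run again from scratch.

A "WARNING" reports that the cache version differs, and the preprocessing is re-run.

Note, however, that the re-run is triggered only when the set of preprocessing steps grows or shrinks; changing what an existing step does will not trigger it.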
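The version check described above can be pictured roughly as follows. This is a minimal sketch, not the actual DataPreProcessor implementation: the function names (steps_signature, load_or_run), the cache layout (a dict with "version" and "data" keys), and the idea of hashing the step names are all assumptions made for illustration. It also shows why editing the body of a step does not invalidate the cache: only the list of step names goes into the signature.

```python
import hashlib
import pickle
import tempfile
import warnings
from pathlib import Path


def steps_signature(step_names):
    """Hash only the ordered list of step names (hypothetical versioning rule)."""
    return hashlib.md5("|".join(step_names).encode("utf-8")).hexdigest()


def load_or_run(cache_file, steps, data):
    """Return the cached output if the step list is unchanged, else recompute.

    steps: list of (name, function) pairs applied in order.
    """
    version = steps_signature([name for name, _ in steps])
    if cache_file.exists():
        with cache_file.open("rb") as f:
            cached = pickle.load(f)
        if cached["version"] == version:
            return cached["data"]  # cache hit: preprocessing is skipped
        warnings.warn("cache version mismatch; re-running preprocessing")
    for _, func in steps:
        data = func(data)
    cache_file.parent.mkdir(parents=True, exist_ok=True)
    with cache_file.open("wb") as f:
        pickle.dump({"version": version, "data": data}, f)
    return data


# Usage: the second call hits the cache, the third re-runs because a step was added.
cache_file = Path(tempfile.mkdtemp()) / "cache_data.pkl"
steps = [("add_one", lambda xs: [x + 1 for x in xs])]
first = load_or_run(cache_file, steps, [1, 2, 3])
second = load_or_run(cache_file, steps, [10, 20, 30])  # input is ignored on a hit
steps.append(("double", lambda xs: [x * 2 for x in xs]))
with warnings.catch_warnings():
    warnings.simplefilter("ignore")
    third = load_or_run(cache_file, steps, [1, 2, 3])
```

With a scheme like this, deleting the cache file by hand is the simplest way to force a re-run after changing what an existing step does.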

11-2. Checking the information added by preprocessing¶

11-2-1. Best-time information¶

display(
    df.columns[
        df.columns.str.contains(r"^.+(?<!_lag[1-9])$", regex=True) &
        df.columns.str.contains(r"^mochiTime", regex=True)
    ]
)

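mochiTime_org is not needed, but the other columns are.

- mochiTime: top speed up to the start of the last 3F
- mochiTime3F: top speed over the last 3F
- mochiTime_mean: mean mochiTime of the runners in each race
- mochiTime_diff: difference from the per-race mean mochiTime
- mochiTime3F_mean: mean mochiTime3F of the runners in each race
- mochiTime3F_diff: difference from the per-race mean mochiTime3F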
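As a rough illustration of how the per-race mean and difference columns relate to the base column, here is a minimal pandas sketch. The toy values are made up, and the sign of the difference (runner minus race mean) is an assumption; the actual preprocessing code may define it the other way round.

```python
import pandas as pd

# Toy data: two races; the values are made up for illustration.
toy = pd.DataFrame({
    "raceId": ["r1", "r1", "r1", "r2", "r2"],
    "mochiTime": [16.0, 17.0, 18.0, 15.0, 17.0],
})

# Per-race mean, broadcast back to every runner in that race.
toy["mochiTime_mean"] = toy.groupby("raceId")["mochiTime"].transform("mean")

# Assumed sign convention: the runner's value minus the race mean.
toy["mochiTime_diff"] = toy["mochiTime"] - toy["mochiTime_mean"]

print(toy)
```

The difference column expresses each runner relative to the field it actually faces, which is what makes it comparable across races run under different conditions.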

11-2-2. Running-style information¶

display(
    df.columns[
        df.columns.str.contains(r"^.+(?<!_lag[1-9])$", regex=True) &
        df.columns.str.contains(r"^cluster", regex=True)
    ]
)

dist_columns = df.columns[df.columns.str.contains(
    r"cluster0_\d+$")].tolist()

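Using this information directly would cause data leakage, so it cannot be used as-is.

It is used in the form of previous-race information.

- cluster0: running-style classification with 4 clusters
- cluster1: running-style classification with 16 clusters
- cluster0_n: distribution of the 4-cluster classes over the previous five races of the horses running in each race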
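One way to picture the cluster0_n columns is as the share of each running-style class among the runners' previous races, computed per race. The sketch below is only an interpretation: the lag column names (cluster0_lag1, cluster0_lag2), the use of shares rather than raw counts, and the toy values are all assumptions, and only two lags are used to keep it short.

```python
import pandas as pd

N_CLUSTERS = 4
lag_cols = ["cluster0_lag1", "cluster0_lag2"]  # assumed column names

toy = pd.DataFrame({
    "raceId": ["r1", "r1", "r2"],
    "cluster0_lag1": [0, 2, 1],
    "cluster0_lag2": [0, 3, 1],
})

# One row per (race, past run), so past runs of all runners can be counted together.
long = toy.melt(id_vars="raceId", value_vars=lag_cols, value_name="cluster")

# Count each class per race; classes that never occur get an explicit 0.
counts = (
    long.groupby("raceId")["cluster"]
    .value_counts()
    .unstack(fill_value=0)
    .reindex(columns=range(N_CLUSTERS), fill_value=0)
)

# Convert counts to shares and name the columns cluster0_0 ... cluster0_3.
dist = counts.div(counts.sum(axis=1), axis=0).add_prefix("cluster0_")

# Attach the per-race distribution to every runner of that race.
toy = toy.join(dist, on="raceId")
```

Because these columns are built only from earlier races, they describe the likely shape of the field without using anything from the race being predicted.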

11-2-3. Previous-race information¶

display(df.columns[df.columns.str.contains(r"_lag", regex=True)])

These are the previous five races' values of cluster0, cluster1, toL3F_vel, toL3F_vel_diff, last3F_vel, and last3F_vel_diff.

The previous-race columns can be obtained from the following attribute:

dataPreP.lag_columns
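Previous-race features of this kind are typically built by shifting each horse's own history. The following is a minimal sketch under assumptions: the horseId column name, the "_lagK" naming, and the toy values are illustrative and not taken from DataPreProcessor.

```python
import pandas as pd

toy = pd.DataFrame({
    "horseId": ["h1", "h1", "h1", "h2", "h2"],  # assumed key column
    "raceDate": pd.to_datetime(
        ["2023-01-08", "2023-02-05", "2023-03-05", "2023-01-08", "2023-03-05"]),
    "last3F_vel": [16.5, 16.8, 17.0, 16.0, 16.2],
})

lag_n = 2  # plays the role of lagN
toy = toy.sort_values(["horseId", "raceDate"]).reset_index(drop=True)

lag_columns = []
for k in range(1, lag_n + 1):
    col = f"last3F_vel_lag{k}"
    # shift(k) within each horse: the value from k races earlier, NaN if there is none
    toy[col] = toy.groupby("horseId")["last3F_vel"].shift(k)
    lag_columns.append(col)

print(toy)
```

Since every lag value comes from a strictly earlier race of the same horse, these columns are safe to use as features, unlike the same quantities measured in the race being predicted.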

11-3. Creating the model-building instance¶

lgbm_model_manager = LightGBMModelManager(
    # Path of the folder, under the models directory, named after the model to create.
    # An absolute path is the safe choice.
    root_dir / "models" / "third_model",  # model ID of the third model
    split_year,
    target_year,
    end_year
)

# columns used as explanatory variables
feature_columns = [
    'distance',
    'number',
    'boxNum',
    # 'odds',
    # 'favorite',
    'age',
    'jweight',
    'weight',
    'gl',
    'race_span',
    "raceGrade",  # grade information added
    # region best-time information
    "mochiTime",
    "mochiTime3F",
    # endregion
    # region predicted running style and times
    "pred_last3F",
    "pred_toL3F",
    "pred_cls"
    # endregion
] + dataPreP.encoding_columns + \
    dataPreP.lag_columns + \
    dist_columns

# add pedigree information
feature_columns += ["stallionId", "breedId", "bStallionId", "b2StallionId",]

# add win-rate information
feature_columns += ['winR_stallion', 'winR_breed',
                    'winR_bStallion', 'winR_b2Stallion']

# column for the target variable
objective_column = "label_in1"

# register the explanatory variables and the target with the model manager
lgbm_model_manager.set_feature_and_objective_columns(
    feature_columns, objective_column)

# create the target variable: flag the first-place rows as positives
df = lgbm_model_manager.add_objective_column_to_df(df, "label", 1)

11-4. Creating the datasets¶

dataset_mapping = lgbm_model_manager.make_dataset_mapping(
    df,
    target_category=[["stallionId"], ["breedId"],
                     ["bStallionId"], ["b2StallionId"]],
    target_sub_category=["field", "dist_cat"]
)

# register the dataset mapping created above
dataset_mapping = lgbm_model_manager.setup_dataset(dataset_mapping)

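11-5. Training with a custom loss function¶

The reason for using a custom loss function is that I felt plain classification had reached its limit.

In addition, the current classification predicts each horse on its own, so the prediction does not take the other horses in the race into account.

I therefore thought it would be better to build a custom objective that lets the model learn with the other runners taken into account.

The custom objective optimizes the KL divergence.

The goal is to use the KL divergence to measure, and minimize, the gap between the odds-implied win-rate distribution of the actual 1st to 3rd place finishers and that of the top three horses chosen by the prediction.

So, for each race, training takes the odds-implied win-rate distribution of the top three predicted values, compares it with the odds-implied win rates of the actual 1st to 3rd place finishers, and computes the gradient and Hessian of the KL divergence.

For an explanation of optimizing bets with the KL divergence while accounting for odds, see the article below.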
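To make the quantity being compared concrete, here is a minimal sketch of the KL divergence between two odds-implied distributions for a single race. It only evaluates the divergence; the gradient and Hessian needed by an actual custom objective are not shown. The helper names, the renormalisation within the three selected horses, and the odds values are assumptions for illustration, not the implementation in custom_functions.

```python
import math


def implied_win_probs(odds, payout_rate=0.8):
    """Odds-implied win probabilities, renormalised to sum to 1."""
    raw = [payout_rate / o for o in odds]
    total = sum(raw)
    return [r / total for r in raw]


def kl_divergence(p, q, eps=1e-12):
    """KL(p || q) = sum_i p_i * log(p_i / q_i) for discrete distributions."""
    return sum(pi * math.log((pi + eps) / (qi + eps)) for pi, qi in zip(p, q))


# Final odds of the actual 1st to 3rd finishers, in finishing order (made-up values).
actual_top3_odds = [4.0, 8.0, 16.0]
# Final odds of the three runners the model ranked highest, in predicted order.
predicted_top3_odds = [2.0, 8.0, 32.0]

p = implied_win_probs(actual_top3_odds)
q = implied_win_probs(predicted_top3_odds)

print(kl_divergence(p, q))  # positive: the two distributions differ
print(kl_divergence(p, p))  # 0.0: identical picks give zero divergence
```

The divergence is zero only when the predicted top three carry the same odds profile as the actual top three, which is how odds enter the training signal.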

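Creating the custom objective and custom metric¶

The implementation itself is in the article mentioned above, so please refer to it.

The loss and evaluation functions should be free of calculation errors, so feel free to reuse them.

This source uses the ones in src.model_manager.custom_objects.custom_functions.

Fixing up the datasets¶

The format of the target variable has changed, so the datasets are modified so that the custom objective can use them.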

import lightgbm as lgbm

for dataset in dataset_mapping.values():
    for mode in ["train", "valid", "test"]:
        dfp = dataset.__dict__[mode]

        # odds-implied win rate: 0.8 / odds, renormalised to sum to 1 within each race
        dfp["odds_rate"] = 0.8/dfp["odds"]
        dfp["odds_rate"] /= dfp["raceId"].map(
            dfp[["raceId", "odds_rate"]].groupby("raceId")["odds_rate"].sum())

        # target = finishing position + odds-implied win rate
        dfp[lgbm_model_manager.objective_column] = dfp["label"] + dfp["odds_rate"]

        # group: race size on the row ranked first by horse number in each race, 0 elsewhere
        dfp["group"] = dfp.groupby("raceId")["number"].rank()
        dfp["group"] = dfp["group"].isin([1]).astype(
            int)*dfp["raceId"].map(dfp.groupby("raceId")["raceId"].count())

        dataset.__dict__[mode+"_dataset"] = lgbm.Dataset(
            dfp[lgbm_model_manager.feature_columns],
            dfp[lgbm_model_manager.objective_column],
            group=dfp["group"].values
        )
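The target built above packs two pieces of information into one number. The sketch below reproduces the encoding on a toy race and shows one way a custom objective could unpack it; the decoding step is an assumption about how the packed value is meant to be read, and the odds are made up.

```python
import numpy as np
import pandas as pd

toy = pd.DataFrame({
    "raceId": ["r1", "r1", "r1"],
    "label": [1, 2, 3],        # finishing position
    "odds": [2.0, 4.0, 8.0],   # final win odds (made-up values)
})

# 0.8 / odds, then renormalise so that each race sums to 1.
toy["odds_rate"] = 0.8 / toy["odds"]
toy["odds_rate"] /= toy.groupby("raceId")["odds_rate"].transform("sum")

# Pack both pieces of information into one float target.
toy["target"] = toy["label"] + toy["odds_rate"]

# Assumed decoding inside a custom objective: the integer part is the
# finishing position, the fractional part is the odds-implied win rate.
decoded_label = np.floor(toy["target"]).astype(int)
decoded_rate = toy["target"] - decoded_label

print(toy.assign(decoded_label=decoded_label, decoded_rate=decoded_rate))
```

This decoding relies on every rate being strictly below 1. In a race with a single runner the normalised rate is exactly 1, so the integer part would be off by one and such races would need separate handling.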

11-6. Running model training¶

Nothing new has been added here, so training is run as usual.

# training parameters
# the custom objective and custom metric are specified as follows
params = {
    'boosting_type': 'gbdt',
    # custom KL-divergence objective (no longer binary classification)
    'objective': "kl_divergence_objective",
    'feval': "kl_divergence_metric",
    'verbose': 1,
    'seed': 77777,
    'learning_rate': 0.05,
    "n_estimators": 1000
}

lgbm_model_manager.train_all(
    params,
    dataset_mapping,
    verbose=True,
    stopping_rounds=25,  # no early stopping until this value is exceeded
    val_num=25,  # interval at which logs are printed
)

for dataset_dict in dataset_mapping.values():
    lgbm_model_manager.load_model(dataset_dict.name)
    lgbm_model_manager.predict(dataset_dict)

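11-7. Exporting the model¶

Before the model can be exported, its performance has to be computed.

The following are computed:

- profit and loss
- basic statistics
- odds graph

Calculating profit and loss¶

The profit-and-loss code has not been maintained, so it is a little clunky, but running the code below is all you need.

The current source can only compute profit and loss for win (tansho) bets.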

bet_mode = BetName.tan
bet_column = lgbm_model_manager.get_bet_column(bet_mode=bet_mode)
pl_column = lgbm_model_manager.get_profit_loss_column(bet_mode=bet_mode)

for dataset_dict in dataset_mapping.values():
    lgbm_model_manager.set_bet_column(dataset_dict, bet_mode)

    # region To keep only bets whose prediction confidence is at least the median on the training data
    # q = dataset_dict.pred_train[dataset_dict.pred_train[bet_column].isin([1])]["pred_prob"].quantile(0.50)
    # dataset_dict.pred_valid[bet_column] &= (dataset_dict.pred_valid["pred_prob"] >= q)
    # dataset_dict.pred_test[bet_column] &= (dataset_dict.pred_test["pred_prob"] >= q)
    # endregion

    # region To avoid betting on the 1st to 6th favorites
    # dataset_dict.pred_valid[bet_column] &= ~dataset_dict.pred_valid["favorite"].isin([1, 2, 3, 4, 5, 6])
    # dataset_dict.pred_test[bet_column] &= ~dataset_dict.pred_test["favorite"].isin([1, 2, 3, 4, 5, 6])
    # endregion

    # region To bet only when the odds are at least 7 and below 50
    # dataset_dict.pred_valid[bet_column] &= dataset_dict.pred_valid["odds"].ge(7)
    # dataset_dict.pred_test[bet_column] &= dataset_dict.pred_test["odds"].ge(7)
    # dataset_dict.pred_valid[bet_column] &= dataset_dict.pred_valid["odds"].lt(50)
    # dataset_dict.pred_test[bet_column] &= dataset_dict.pred_test["odds"].lt(50)
    # endregion

_, dfbetva, dfbette = lgbm_model_manager.merge_dataframe_data(
    dataset_mapping, mode=True)

dfbetva, dfbette = lgbm_model_manager.generate_profit_loss(
    dfbetva, dfbette, bet_mode)

dfbette[f"{pl_column}_sum"] = dfbette[pl_column].cumsum()

dfbette[["raceDate", "raceId", "label", "favorite",
         bet_column, pl_column, f"{pl_column}_sum"]]
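For readers without access to generate_profit_loss, the arithmetic behind a win-bet profit-and-loss column can be sketched as follows. This is a simplified stand-in, not the library's implementation: the flat 100-yen stake, the column names "bet", "pl" and "pl_sum", and the toy values are assumptions.

```python
import pandas as pd

STAKE = 100  # yen per win bet (assumed flat stake)

toy = pd.DataFrame({
    "raceId": ["r1", "r2", "r3", "r4"],
    "label": [1, 3, 1, 2],              # finishing position of the selected horse
    "odds": [3.5, 12.0, 8.0, 2.1],      # final win odds (made-up values)
    "bet": [True, True, True, False],   # whether a bet was placed
})

won = toy["bet"] & toy["label"].eq(1)
payout = won * toy["odds"] * STAKE  # odds x stake on a winning bet, else 0
cost = toy["bet"] * STAKE           # the stake is paid on every bet placed
toy["pl"] = payout - cost
toy["pl_sum"] = toy["pl"].cumsum()  # running balance, like the cumulative column above

print(toy)
```

Races without a bet contribute zero to both payout and cost, so they leave the running balance unchanged.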

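Calculating basic statistics¶

The basic statistics cover the return rate, the hit rate, and the number of bets by popularity rank.

Running the code below is all that is needed.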

lgbm_model_manager.basic_analyze(dataset_mapping)
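The three quantities named above can be computed by hand on a small table of placed bets. This is a sketch under assumptions (flat 100-yen stake, win bets only, made-up values); it does not reproduce what basic_analyze does internally.

```python
import pandas as pd

STAKE = 100  # yen per bet (assumed flat stake)

bets = pd.DataFrame({
    "favorite": [1, 4, 4, 7, 2],           # popularity rank of the horse bet on
    "label": [1, 5, 1, 3, 2],              # finishing position
    "odds": [2.0, 9.0, 11.0, 25.0, 4.0],   # final win odds (made-up values)
})

hit = bets["label"].eq(1)

# Hit rate: share of bets that won.
hit_rate = hit.mean()

# Return rate: total payout divided by total stake.
return_rate = (hit * bets["odds"] * STAKE).sum() / (len(bets) * STAKE)

# Number of bets per popularity rank.
bets_by_favorite = bets["favorite"].value_counts().sort_index()

print(hit_rate, return_rate)
print(bets_by_favorite)
```

A return rate above 1 means the bets paid back more than was staked; here two hits at long odds outweigh three losing bets.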

Calculating the odds graph¶

For an explanation of the odds graph, see the video below.

It covers what matters most when evaluating a model.

dftrain, dfvalid, dftest = lgbm_model_manager.merge_dataframe_data(
    dataset_mapping,
    mode=True
)

summary_dict = lgbm_model_manager.gegnerate_odds_graph(
    dftrain, dfvalid, dftest, bet_mode)

print("Odds graph for the 'test' data")
summary_dict["test"].fillna(0)

Exporting the model¶

Running the following exports the model's performance.

lgbm_model_manager.export_model_info()

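11-8. Checking performance (launching the web app)¶

Running the code below launches the web app.

Using Command Prompt or a terminal is recommended. In that case, remove the leading "!" before running.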

! python ../app_keiba/manage.py makemigrations

! python ../app_keiba/manage.py migrate

! echo server launch OK

# ! python ../app_keiba/manage.py runserver 12345

Once "server launch OK" is displayed, click the link below to open the web app.

11-9. Results¶

| | Model ID | Support-rate OGS | Return-rate OGS | AonBOGS |
|---|---|---|---|---|
| 1 | third_model | 0.51958 | -3.51619 | 4.13367 |
| 2 | second_model | 1.02706 | -4.55201 | 3.09785 |
| 3 | first_model (baseline) | 0.41924 | -7.64492 | |

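The results show that, on a weighted-average basis, the third model beats the first model's return rate by 4.13367 points.

Compared with the second model (3.09785), that is an improvement of about 1 point.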

11-10. Checking feature importance¶

11-10-1. Feature importance of the third model¶

import pandas as pd

lgbm_model_manager.load_model("2023second")

dfimp = pd.Series(data=lgbm_model_manager.model.feature_importance(importance_type="gain").tolist(),
                  index=feature_columns).sort_values()/1000

dfimp[dfimp > 0]

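Interestingly, the horse-number feature number comes out as the most important, followed by breedId (the dam) and jockeyId_en (the jockey).

This suggests that the common belief that inside draws are advantageous is not entirely off the mark.

11-10-2. Comparing the second and third models¶

Here we check what the second model's importances looked like and how they changed in the third model.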

lgbm_model_manager2 = LightGBMModelManager(
    # Path of the folder, under the models directory, named after the model to create.
    # An absolute path is the safe choice.
    root_dir / "models" / "second_model",  # model ID of the second model
    split_year,
    target_year,
    end_year
)

lgbm_model_manager2.load_model("2023second")

dfimp2 = pd.Series(data=lgbm_model_manager2.model.feature_importance(importance_type="gain").tolist(),
                   index=lgbm_model_manager2.feature_columns).sort_values()/1000

pd.concat([dfimp[dfimp > 0], dfimp2[dfimp2 > 0]], axis=1).sort_values(
    0).rename(columns={0: "3rd", 1: "2nd"})

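The second model includes odds as a feature, so it is no surprise that odds ranks highest there. However, winR_breed, the win rate of the dam's offspring, which ranked next in the second model, has much lower importance in the third model.

Moreover, the dam's ID breedId ranks higher, which suggests the model is learning that having a particular horse ID matters more than the properties attached to that ID.

11-11. Summary¶

Unlike the first and second models, which optimize the hit rate, the third model optimizes the odds-implied win-rate distribution of the horses finishing 1st to 3rd.

So although this model does not optimize the bets themselves, it predicts the finishing order from the odds-implied win-rate distribution rather than from rules such as "odds under 10" or "the favorite".

In other words, because odds enter the optimization, I consider it a model that accounts for both the hit rate and the return rate.

That said, the approach is not exactly orthodox, and the odds used here are the final odds, so it could be argued that leakage occurs at training time.

A model trained to minimize the distribution gap between the final odds and estimated odds would therefore be closer to reality, but that is left as a future extension and is not covered here.

(I recognize that this will have to be tackled eventually, so please allow me to set it aside for now.)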
